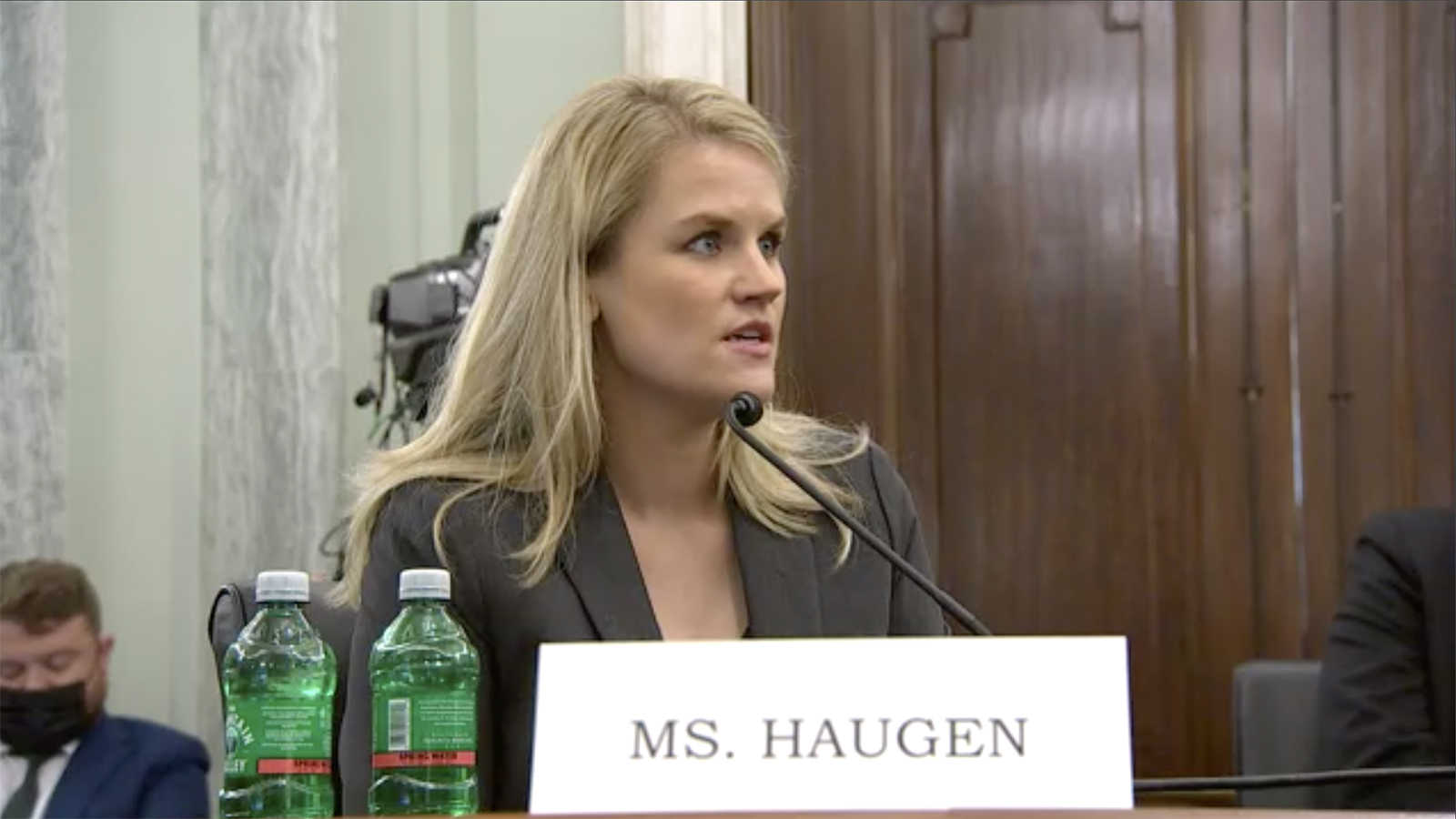

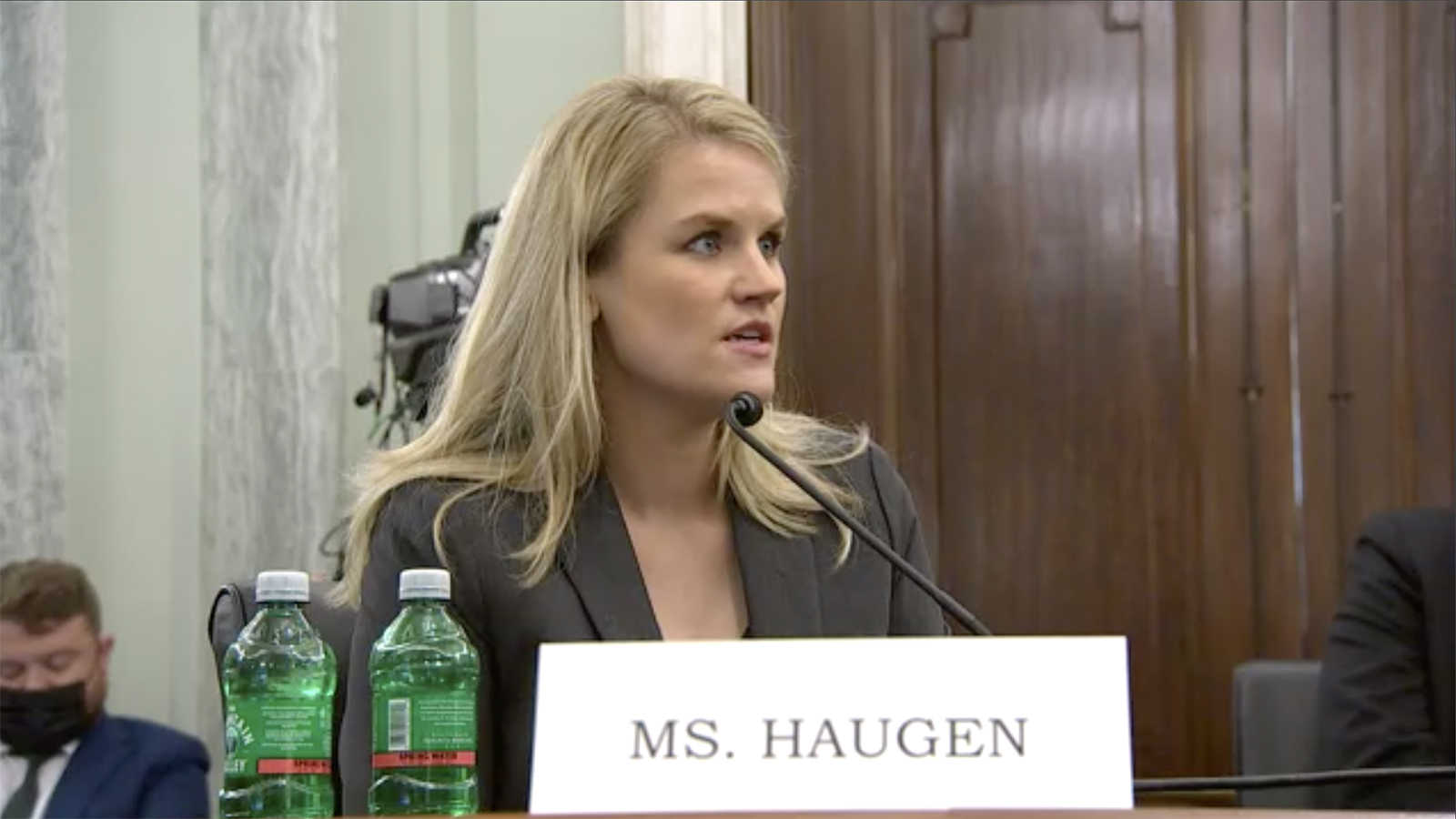

Whistleblower Frances Haugen, 37, a former product manager on Facebook's civic integrity team, testified at an internet safety hearing on Capitol Hill on Tuesday and said "they [Facebook] have put their immense profits before people."

She told senators at a Washington hearing that Facebook's leaders know how to make their products safer but won't.

She said Facebook's products "harm children, stoke division and weaken our democracy."

Democrats and Republicans expressed their concern about Facebook in their opening remarks.

Facebook has rejected her claims, saying it has spent significant sums of money on safety.

The highly anticipated testimony comes a day after a massive Facebook outage, which saw services down for six hours and affected billions of users globally.

A big part of Haugen's argument is that the only people who really understand Facebook's inner workings are its employees.

"Facebook has a culture that emphasises that insularity is the path forward," she told the hearing earlier, "that if information is shared publicly, it will be misunderstood."

Some say that, if you follow Haugen's logic, this inward-looking culture is precisely why congressional oversight of the social media giant is needed.

Haugen is arguing that regulation may even make Facebook "more profitable over the long-term".

In response to questions from Democratic Senator John Hickenlooper, Haugen says "if it wasn’t as toxic, less people could quit it" - though that isn't something we can test.

Haugen also continued her comparisons between Facebook and the tobacco industry.

"Only about 10% of people who smoke ever get lung cancer,” she said. “So [at Facebook] there’s the idea that 20% of your users can be facing serious mental health issues and that’s not a problem."

Haugen, whose last role at Facebook was as a product manager supporting the company’s counter-espionage team, was asked whether Facebook is used by “authoritarian or terrorist-based leaders” around the world.

She said such use of the platforms is “definitely” happening, and that Facebook is “very aware” of it.

“My team directly worked on tracking Chinese participation on the platform, surveilling, say, Uyghur populations, in places around the world. You could actually find the Chinese based on them doing these kinds of things,” Haugen said. “We also saw active participation of, say, the Iran government doing espionage on other state actors.”

She went on to say that she believes, “Facebook’s consistent understaffing of the counterespionage information operations and counter terrorism teams is a national security issue, and I’m speaking to other parts of Congress about that … I have strong national security concerns about how Facebook operates today.”

Sen. Richard Blumenthal suggested that these national security concerns could be the subject of a future subcommittee hearing.

Who is the whistleblower?

Here's what you need to know about Frances Haugen, the former Facebook employee taking centre stage at Tuesday's hearing.

Who is she?

The 37-year-old revealed herself on Sunday as the person behind a series of surprise leaks of internal Facebook documents.

Haugen told CBS News she had left Facebook earlier this year after becoming exasperated with the company.

Why?

She was a product manager on the civic integrity team until it was disbanded a month after the 2020 presidential election.

"Like, they basically said, ‘Oh good, we made it through the election...We can get rid of Civic Integrity now.’ Fast forward a couple months, we got the insurrection."

Facebook's Integrity chief has since contested this, saying it wasn't disbanded but "integrated into a larger Central Integrity team".

What did she do?

Before she left the company, Haugen copied a series of internal memos and documents.

She has shared them with the Wall Street Journal, which has been publishing the material in batches over the last three weeks in a series sometimes referred to as the Facebook Files.

Haugen says these documents prove the tech giant repeatedly prioritised "growth over safety".

Profits over people

If there is an overall theme so far, this is it.

According to Haugen, Facebook routinely resolves conflicts between its bottom line and the safety of its users in favour of its profits.

"The company's leadership knows how to make Facebook and Instagram safer, but won't make the necessary changes because they have put their astronomical profits before people," she says.

The buck stops with Zuck

Throughout her remarks, Haugen has made clear that Facebook chief Mark Zuckerberg has wide-ranging oversight of his company and maintains ultimate control of all key decisions.

"The buck stops with Mark," she says. "There is no one currently holding Mark accountable but himself."

Teen girls targeted

Senators have devoted much of their time to the harm posed by Facebook and Instagram to young people, especially teenage girls.

Several have pointed to research that suggests both sites worsen teens' body image and promote eating disorders.

Lawmakers cited Facebook's own data, published by the Wall Street Journal last month, which found that 32% of teen girls said that when they feel bad about their bodies, Instagram makes them feel worse.

Facebook fires back

Andy Stone, Facebook's policy communications director, is pushing back live on Twitter.

He wrote that Haugen was being asked about topics she did not work on directly, including child safety and Instagram.

But, as others online note, she has come armed with Facebook's own documents to back up her testimony.


Whistleblower: Transparency and an end to engagement-based ranking would improve Facebook

Facebook whistleblower Frances Haugen detailed how the social media platform could become a better environment, saying it could introduce transparency measures and small frictions and move away from its "dangerous" engagement-based ranking system.

These changes would rethink how content is amplified without "picking winners or losers in the marketplace of ideas," she told senators on Tuesday.

"On Twitter, you have to click through on a link before you reshare it. Small actions like that friction don’t require picking good ideas and bad ideas, they just make the platform less twitchy, less reactive. Facebook’s internal research says each one of these small actions, dramatically reduces misinformation, hate speech and violence-inciting content on the platforms," Haugen said.

She advocated for chronologically ordered content instead.

"I'm a strong proponent of chronological ranking, ordering by time, with a little bit of spam demotion. Because I think we don't want computers deciding what we focus on, we should have software that is human-scaled, where humans have conversations together, not computers facilitating who we get to hear from," Haugen said.

In addition, she called for a privacy-conscious regulatory body that would work with academics, researchers and government agencies to "synthesize requests for data," because the social media giant is currently not obligated to disclose any data.

"Even data as simple as what integrity systems exist today and how well do they perform?" she suggested. "Basic actions like transparency would make a huge difference."

What does engagement-based ranking mean?

Facebook, like other platforms, uses algorithms to amplify or boost content that receives engagement in the form of likes or shares or comments. In Facebook’s view, this helps a user “enjoy” their feed, Haugen explained.

“The dangers of engagement-based ranking are that Facebook knows that content that elicits an extreme reaction from you is more likely to get a click, a comment or reshare," which isn't necessarily for the user's benefit, she added. "It's because they know that other people will produce more content if they get the likes and comments and reshares. They prioritize content in your feed so that you will give little hits of dopamine to your friends so they'll create more content.”

Whistleblower: Facebook should declare "moral bankruptcy" and ask Congress for help

Former Facebook employee Frances Haugen said it's time for Facebook to declare "moral bankruptcy" and admit that it has a problem and needs help solving it.

She said that since Facebook is a "closed system" the company has "had the opportunity to hide their problems."

"And like people often do when they can hide their problems, they get in over their heads," Haugen added.

She said that Congress should step in and say to the company, "You don't have to hide these things from us" or "pretend they're not problems."

She believes that Congress should give Facebook the opportunity to "declare moral bankruptcy and we can figure out how to fix these things together."

Asked to clarify what she meant by "moral bankruptcy," Haugen said she envisioned a process like financial bankruptcy, where "they admit [they] did something wrong" and there is a "mechanism" to "forgive them" and "move forward."

"Facebook is stuck in a feedback loop that they cannot get out of...they need to admit that they did something wrong and that they need help to solve these problems. And that's what moral bankruptcy is," she said.

Whistleblower: Facebook's artificial intelligence systems only catch "very tiny minority" of offending content

Facebook's artificial intelligence (AI) systems "only catch a very tiny minority of offending content," whistleblower Frances Haugen told congressional lawmakers on Tuesday.

"The reality is that we've seen from repeated documents within my disclosures, is that Facebook's AI systems only catch a very tiny minority of offending content. And best case scenario, in the case of something like hate speech, at most they will ever get 10 to 20%. In the case of children, that means drug paraphernalia ads like that, it's likely if they rely on computers and not humans, they will also likely never get more than 10 to 20% of those ads," Haugen explained.

She said the reason behind it is Facebook's "deep focus on scale."

"So scale is, 'can we do things very cheaply for a huge number of people?' Which is part of why they rely on AI so much. It's possible none of those ads were seen by a human," she said.

Facebook struggles to tackle problems because it's "understaffed," whistleblower says

Facebook is extraordinarily profitable, so it is intriguing to hear Frances Haugen repeatedly refer to the company as "understaffed." She said this staffing shortage contributes to a vicious cycle of platform-wide problems.

"Facebook has struggled for a long time to recruit and retain the number of employees it needs to tackle the large scope of projects that it has chosen to take on," Haugen said, emphasizing the word "chosen."

"Facebook is stuck in a cycle where it struggles to hire; that causes it to understaff projects; which causes scandals; which then makes it harder to hire," she said.

In a later exchange, Haugen described the following "pattern of behavior": often, she said, "problems were so understaffed that there was kind of an implicit discouragement from having better detection systems." For example, "my last team at Facebook was on the counterespionage team within the threat intelligence org, and at any given time, our team could only handle a third of the cases that we knew about. We knew that if we built even a basic detector, we would likely have many more cases."

It's a twist on the adage about being "too big to fail." Longtime tech reporter Craig Silverman observed that Haugen was calling Facebook "too big to staff."

"The kids who are bullied on Instagram, the bullying follows them home. It follows them into their bedrooms. The last thing they see before they go to bed at night is someone being cruel to them. Or the first thing in the morning is someone being cruel to them. Kids are learning that their own friends, people who they care about, are cruel to them," she said.

This could affect their domestic relationships as they grow older, Haugen added.

"Facebook's own research is aware that children express feelings of loneliness and struggling with these things because they can't even get support from their own parents," who never had this experience with technology, the former Facebook employee added. "I don't understand how Facebook can know all these things and not escalate it to someone like Congress for help and support in navigating these problems."

Credit: BBC/CNN